“AI is good, the problems of AI will be solved by AI and young people”

What will artificial intelligence look like by 2050? This was the subject of a recent Hungarian-Dutch international study analysing the views of international media and ICT experts. It was published in early January in the prestigious specialised scientific journal AI & Society by researchers from the Ludovika University of Public Service (Ludovika-UPS), Corvinus University of Budapest and the University of Amsterdam. The team (Katalin Fehér, Lilla Vicsek and Mark Deuze) first surveyed 42 participants, 25 of whom also took part in a longer survey that lasted several weeks. To make the sample more representative, the authors took into account gender, length of professional experience, region of the world, and the discipline or industry in which each expert works.

Despite the diversity of the sample, the answers were surprisingly homogeneous: respondents are generally optimistic that AI will have a positive impact on society by 2050. They believe that the basic values and structures of society will not change but will be reinforced, and that there is a good chance of greater prosperity and security and of less inequality. While they recognise the problems that need to be resolved, they hope these will be tackled by future generations, perhaps with the help of evolving artificial intelligence. They therefore look to an AI-shaped future with confidence, although this confidence reflects a degree of professional short-sightedness and a reluctance to take on responsibility.

Brave new world?

The experts who responded predict that by 2050, AI will have an impact on all aspects of socio-political and everyday life, supporting human well-being and guiding human perception and communication. They believe that we will have access to democratically accessible, automated, personalised, unbiased and reliably interpreted information everywhere in the world, and that the ethical functioning of AI will be effectively ensured through regulation. They think that in two to three decades, AI could act as a substitute for people: conducting conversations, removing language barriers and helping with everyday routines. According to some respondents, animal and plant communication could be combined with big data and smart systems, making nature a source for the further development of artificial intelligence. Several respondents argued that religions could also become more open and tolerant if people increasingly communicate on AI-driven platforms. Survey respondents hope that AI will ultimately be in harmony with both society and nature, fostering creativity and promoting equity, inclusiveness and human rights.

Fight fire with fire – AI against the dangers of AI

However, the media and ICT experts surveyed also recognise that there is a great deal of uncertainty about AI and that it could have drawbacks. Among the most notable are that people will become dependent on machines, the risk of outages will grow, information wars may break out, inequalities may increase, smaller languages and cultures may be disadvantaged, and the carbon footprint of artificial intelligence may increase. They argue that human communication, freedom of expression and democracy could decline, and that changes in the labour market could accelerate. The expectation is that artificial intelligence will not be able to change current socio-cultural values and norms on its own, but may amplify or suppress them, leading to new forms of abuse and violence. However, they believe that technology-driven misuse, misinformation, filter bubbles and information overload can be reduced, possibly with the help of artificial intelligence itself. In their view, this is a task for future generations, who already know what it is like to live with AI. They also believe in the power of NGOs and the arts to solve problems.

An explanation for trust in AI

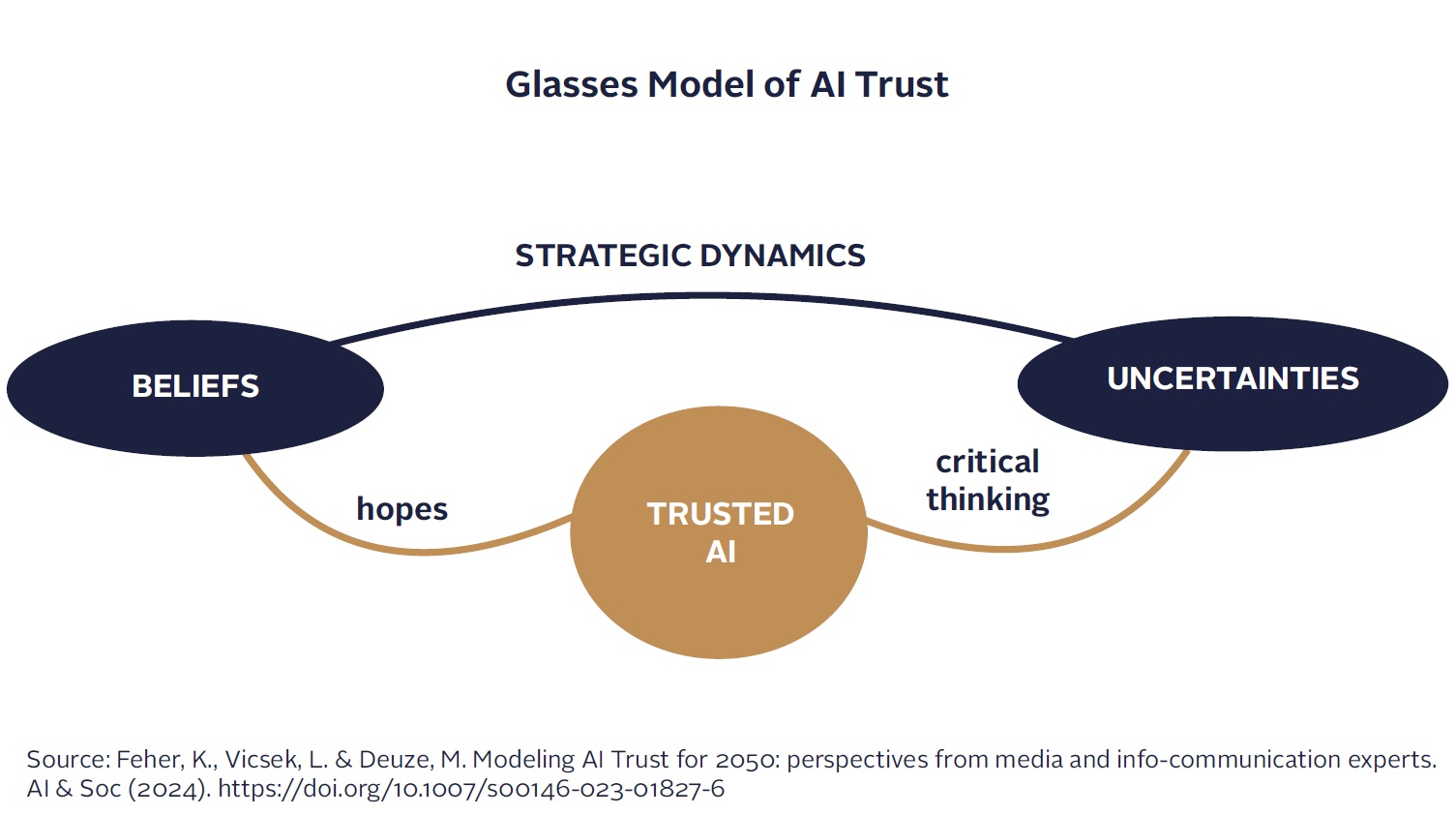

“We have developed a new model for interpreting the results, the so-called ‘trust in AI’ glasses model. It shows how the experts’ trust in AI is balanced (like glasses on the bridge of the nose) between two competing sets of concepts (the lenses through which we view the world and AI). These two sets of concepts are made up of beliefs based on hopes, on the one hand, and uncertainties generated by critical thinking, on the other. Beliefs and uncertainties are held in the same frame, forming a strategic combination. Taken together, they represent a balanced view that the technology can ultimately be reliable if used responsibly,” explained Katalin Fehér, a researcher at Ludovika-UPS and first author of the study.

“Part of the reason for media and ICT professionals’ optimism about AI may be that their work leads them to see the distant future as a simple extension of the present. At the same time, they do not want to look further ahead, especially because they often do not consider themselves responsible for shaping the future. They prefer to pass this responsibility on to future generations. It is through this lens that they distort their own vision of the future. They also tend to overlook the fact that artificial intelligence could take over their own roles as media and ICT experts. This suggests professional myopia and cognitive dissonance,” said Lilla Vicsek, a sociologist at Corvinus University and co-author of the study.

The survey was conducted in the autumn of 2022, just before ChatGPT and generative artificial intelligence burst onto the scene. This was the last possible moment at which the researchers could rule out that participants had used artificial intelligence to help them with their answers.